Problem:

Users may choose not to activate a system feature, or not to provide some data input, and that choice can reduce the accuracy, efficiency, or effectiveness of the system. They want to be warned when a choice will have such an effect.

Solution:

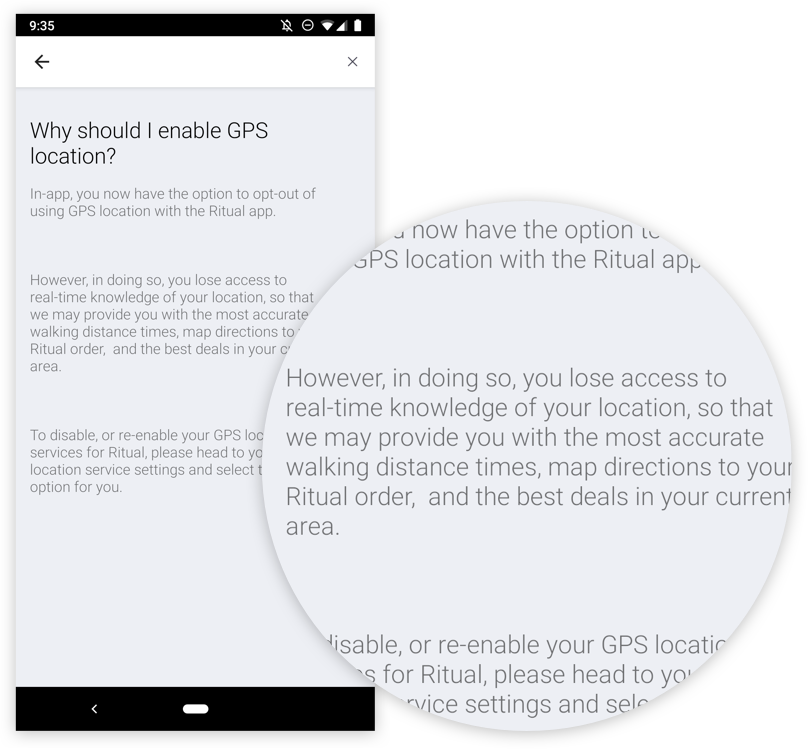

The system warns the user that deactivating a system action, dismissing data, or failing to provide data may have an effect on the outcomes of some actions.
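As a rough illustration of the solution, the sketch below generates a warning only for settings the user has declined and only when some outcome actually depends on them. The `Setting` class, the `impact_warnings` function, and the sample settings are all hypothetical names invented for this example, not part of any particular system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Setting:
    """A feature or data input the user may decline (hypothetical model)."""
    name: str
    provided: bool            # True if the feature is on or the data was supplied
    affected_outcomes: tuple  # outcomes that degrade when it is missing


def impact_warnings(settings):
    """Return one warning per declined setting that affects some outcome."""
    warnings = []
    for s in settings:
        if not s.provided and s.affected_outcomes:
            outcomes = ", ".join(s.affected_outcomes)
            warnings.append(
                f"Without {s.name}, the following may be less accurate: {outcomes}."
            )
    return warnings


# Illustrative data only.
settings = [
    Setting("location access", False, ("delivery estimates", "nearby store results")),
    Setting("date of birth", False, ()),  # declined, but nothing depends on it
    Setting("purchase history", True, ("recommendations",)),
]

for message in impact_warnings(settings):
    print(message)
```

One possible design choice, shown here, is to tie each warning to an explicit list of affected outcomes, so that no warning is produced for data the system does not actually need.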

Discussion:

This pattern is open to misuse if applied in bad faith: a system can warn the user that it needs data for accuracy when the data is in fact (also) being captured for other purposes, such as customer analysis or marketing leads. Many patterns like this rely on honesty and appropriate use; lies of omission can easily turn otherwise beneficial patterns into coercive dark patterns.