Problem:

The system wants to block bots, and so deploys detection techniques such as CAPTCHAs or behaviour analysis. Some users have visual or cognitive impairments that make CAPTCHAs hard to use, or behave in ways that do not match what the system considers normal. These users do not want to undergo arduous tests, and do not want to be mistaken for bots.

Anti-pattern response:

The system presents the user with a CAPTCHA that they can’t solve. Alternatively, it misidentifies their behaviour as that of a bot and blocks them from using it.

Discussion:

Over the last twenty years, bad-faith actors have created ever more sophisticated bots to fool systems into thinking that they are legitimate human users. These bots can read CAPTCHA text, identify images, and mimic natural human mouse movement and typing. As systems battle to protect themselves from such bots, the bar for humans to prove their humanity is raised ever higher. While we once thought our biggest technological challenge would be for AIs to convince us humans that they were one of us (passing the Turing Test), the everyday concern for most of us is the reverse: convincing the dumb AIs we already have that we are indeed human. We are the ones being tested.

For some of us, this is a minor annoyance at worst. For others, it can render systems entirely unusable (e.g. a user with sight impairments cannot solve a visual CAPTCHA when no fall-back is provided). Even when these systems work correctly, requiring users to make additional effort to prove their humanity can be literally dehumanizing, failing to treat them with the dignity they deserve.

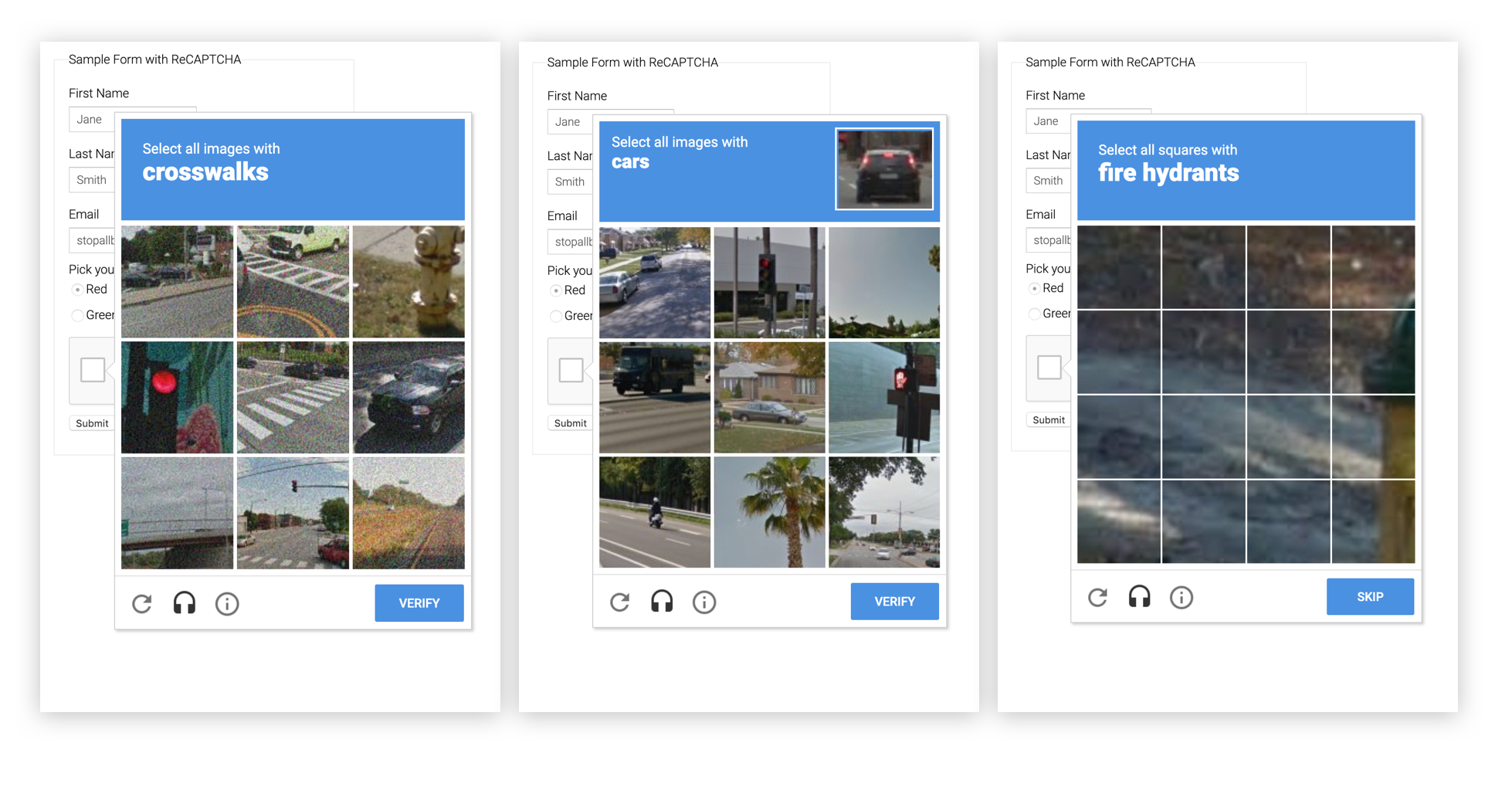

This is a well-known issue, and solutions continue to be developed, such as the evolution of Google’s widely adopted reCAPTCHA API. Version 2 introduced the simple one-click “I’m not a robot” checkbox for many users (with picture matching for others), and v3 goes further by becoming invisible to the user. Behind the scenes, this requires trawling a huge amount of information about the user’s browser set-up, page use, and other actions. Combined with the massive amount that Google already knows about individual users, this lets the system identify bots without explicitly testing the user. Ultimately, the problem of bots is not going away, so the privacy concerns must be weighed against the benefits of frictionless and accessible validation.
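
An invisible, score-based check still leaves the site to decide what happens to users whose behaviour looks unusual. The following is a minimal Python sketch of one way to avoid the anti-pattern, under stated assumptions: it takes a score between 0.0 and 1.0 where higher means more likely human (the convention reCAPTCHA v3 uses), and the thresholds, the `Decision` type, and the action names are all hypothetical illustrations rather than part of any real API. The point it shows is that no branch blocks the user outright; a low or missing score leads to an alternative, accessible way to verify.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical thresholds; real values would need tuning for each site.
ALLOW_THRESHOLD = 0.7
LIGHT_CHECK_THRESHOLD = 0.3


@dataclass(frozen=True)
class Decision:
    action: str  # "allow", "light_check", or "alternative_check"
    reason: str


def decide(score: Optional[float]) -> Decision:
    """Map a humanity score (1.0 = very likely human) to a next step.

    No branch returns a hard block: users who score low, or for whom
    no score is available, are offered another route to verification.
    """
    if score is None:
        # The scoring service failed or was blocked by the user's set-up.
        return Decision("alternative_check", "no score available")
    if score >= ALLOW_THRESHOLD:
        return Decision("allow", "score suggests a human user")
    if score >= LIGHT_CHECK_THRESHOLD:
        # For example, a single checkbox rather than an image puzzle.
        return Decision("light_check", "score is inconclusive")
    # For example, an emailed link or an audio challenge (assumed options).
    return Decision("alternative_check", "score suggests automation")


if __name__ == "__main__":
    for s in (0.9, 0.5, 0.1, None):
        print(s, decide(s))
```

Treating a low score as a reason to offer a different route, rather than as a verdict, keeps atypical but legitimate users in the system at the cost of some extra friction for them.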