Problem:

Users want to know whether they are interacting with a human or with an AI. For example, when a customer service chat interface can be handled by either a human agent or a bot, the user wants to know which one they are talking to.

Dark Pattern Response:

The system presents a chat window that is seemingly staffed by a human agent but is actually run by a chatbot behind the scenes. Alternatively, an AI-powered voice interface masquerades as a human.

Discussion:

For the sake of presenting a professional image, it can be appropriate for a chat interface to be styled with a fictional human character (e.g., a photo and a human name). Initial interactions are often handled by scripted responses and then seamlessly handed off to a human agent as needed. In cases where either a human agent or a chatbot plays a character, many users intuitively understand that the entity behind the mask may not match the identity presented. Given the terse and often scripted nature of chat interactions, it can be genuinely difficult for a user to tell whether the other party is human or machine.

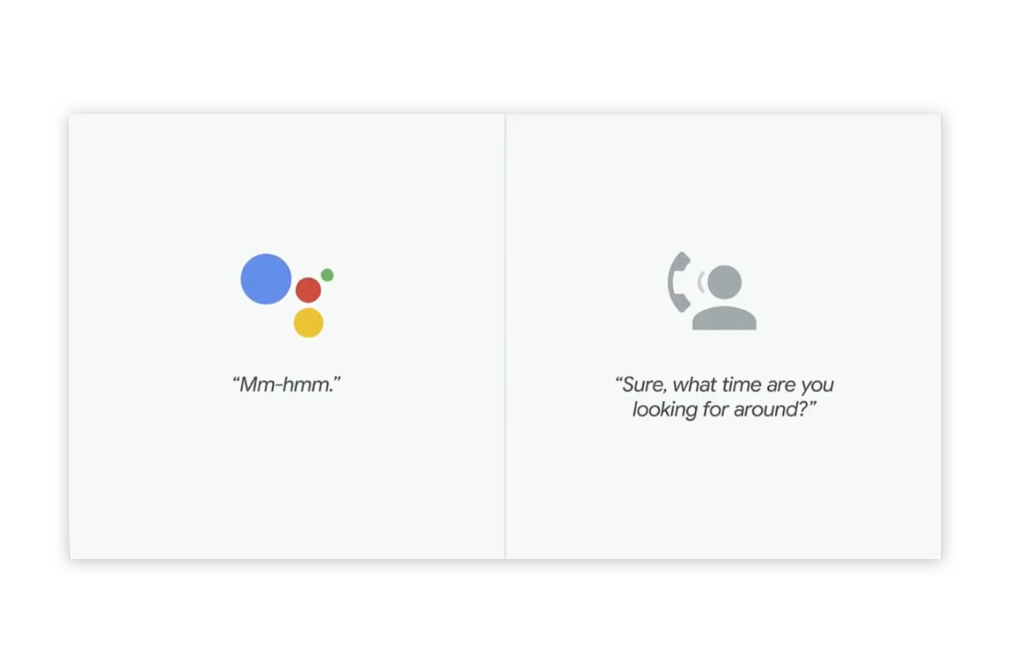

Sometimes, however, the deception is more deliberate. Google's Duplex voice assistant, for example, was designed to sound as human as possible, complete with built-in "umms" and "errs".

Given this, it's important that these systems disclose that they are machines, either upfront or when the user asks directly. If a chatbot insists that it is a human agent and the user believes it, then yes, it has passed the Turing test, but at the cost of further eroding our trust in the honesty of AIs.