Problem:

Users who are deaf or hard of hearing want to use sign language to interact with a system in a similar way to how hearing users use a voice interface.

Solution:

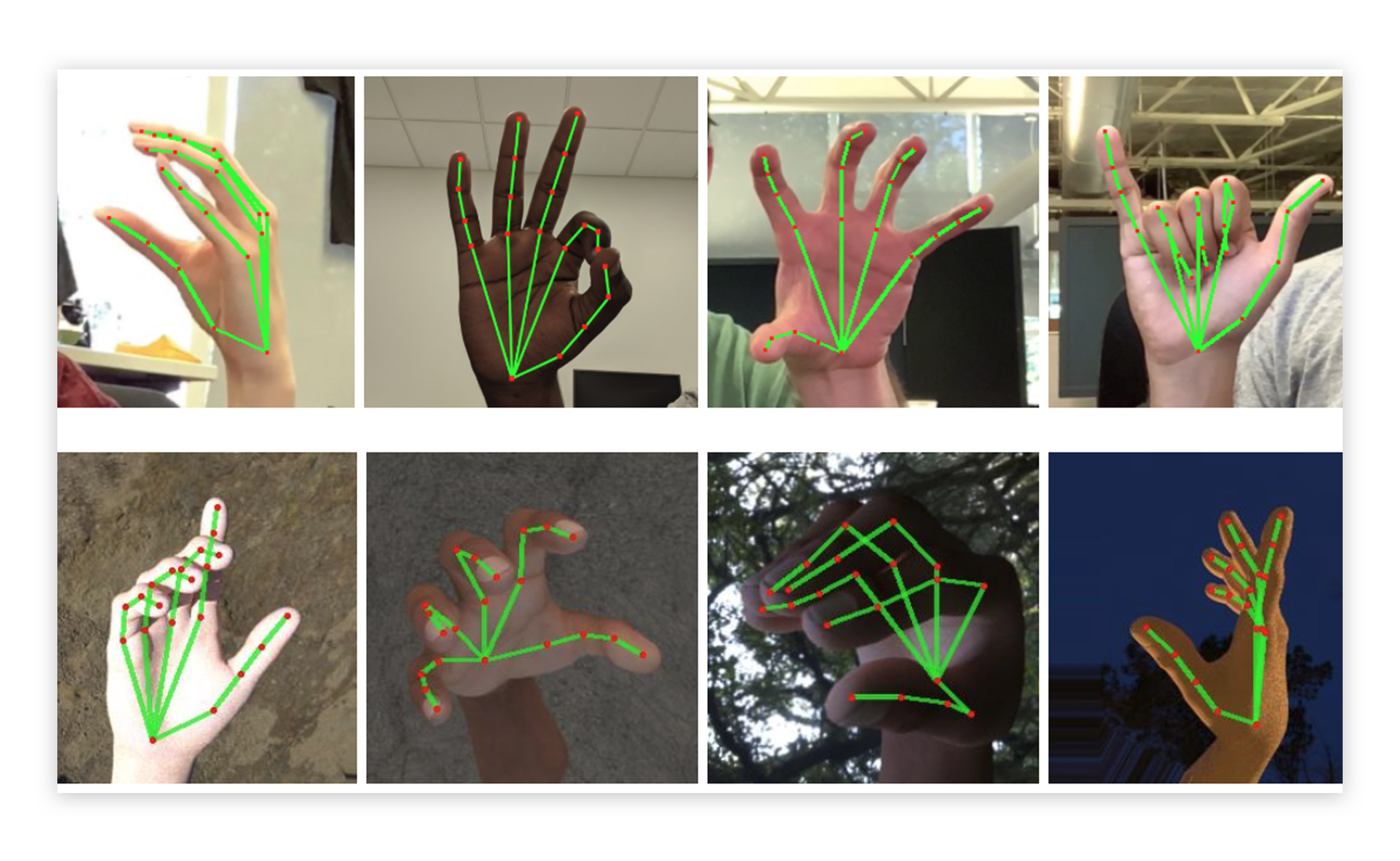

Using video capture and pattern recognition, the system allows the user to communicate with it via sign language. This can be used to issue the system commands, as per a typical voice interface. Alternatively, this can translate sign language into other formats, allowing the user to communicate with other users via text or speech, for example.

Discussion:

When we think of machine translation, we typically picture one language translated to another via text or voice, but there are of course many other ways to communicate. While many sign language users can use other input methods, being able to communicate with a system through their preferred language allows them the same efficiencies and quality of experience as non-deaf users.

Traditionally there have been complexities around machine translation of sign languages such as understanding how the syntactical structures are different from spoken languages. But as advancements in AI unlock the capacity to use signing as input, we should challenge ourselves to consider a range of viable input methods, rather than privileging some and sidelining others.