Problem:

Users want to know when real AI is deployed and when it isn't. In particular, they don't want to be tricked into believing that AI is processing their data when the work is actually done manually by human agents behind the scenes.

Dark pattern response:

The system pretends to use AI when it doesn't, either by passing off a non-learning algorithm as one capable of machine learning or by quietly routing the work to human operators.

Discussion:

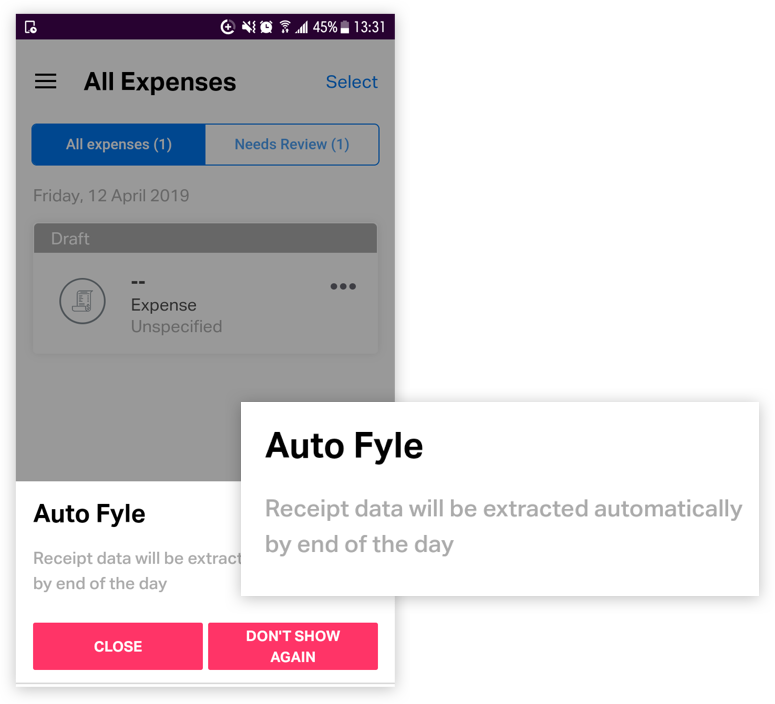

Users are increasingly literate about what AI applications can and cannot reasonably achieve. So if, for example, your app claims to perform advanced optical character recognition (OCR) on borderline-illegible items but quotes a twenty-four-hour turnaround time, a savvy user will immediately suspect that all is not as it seems and will perceive this as a betrayal of trust. The end user would probably not mind which approach is used (human intervention or AI), as long as the expected result arrives in a timely fashion. And if, in this example, a twenty-four-hour lag really is required for AI processing, designers should anticipate the user's suspicion and address it via Setting Expectations & Acknowledging Limitations.